There is an immense amount of noise in the world about how you can, should, and should not use new AI tools. I don’t find most of that advice practical, so I am going to write my own for you.

There are four common ways to use LLMs with text that I want to tackle:

- Generating Text

- Revising Text

- Summarizing Text

- Retrieving Information from Text

There are nuances to using AI for each of these, but there are ways to get around those issues. Before we dive in, note that any screenshots you see here use Anthropic’s Claude, but these techniques work broadly with most of the options out there. While I personally think Claude handles this type of work better, the practical differences between Claude, ChatGPT, Mistral, Gemini, and Llama are fairly minimal, so use what you have.

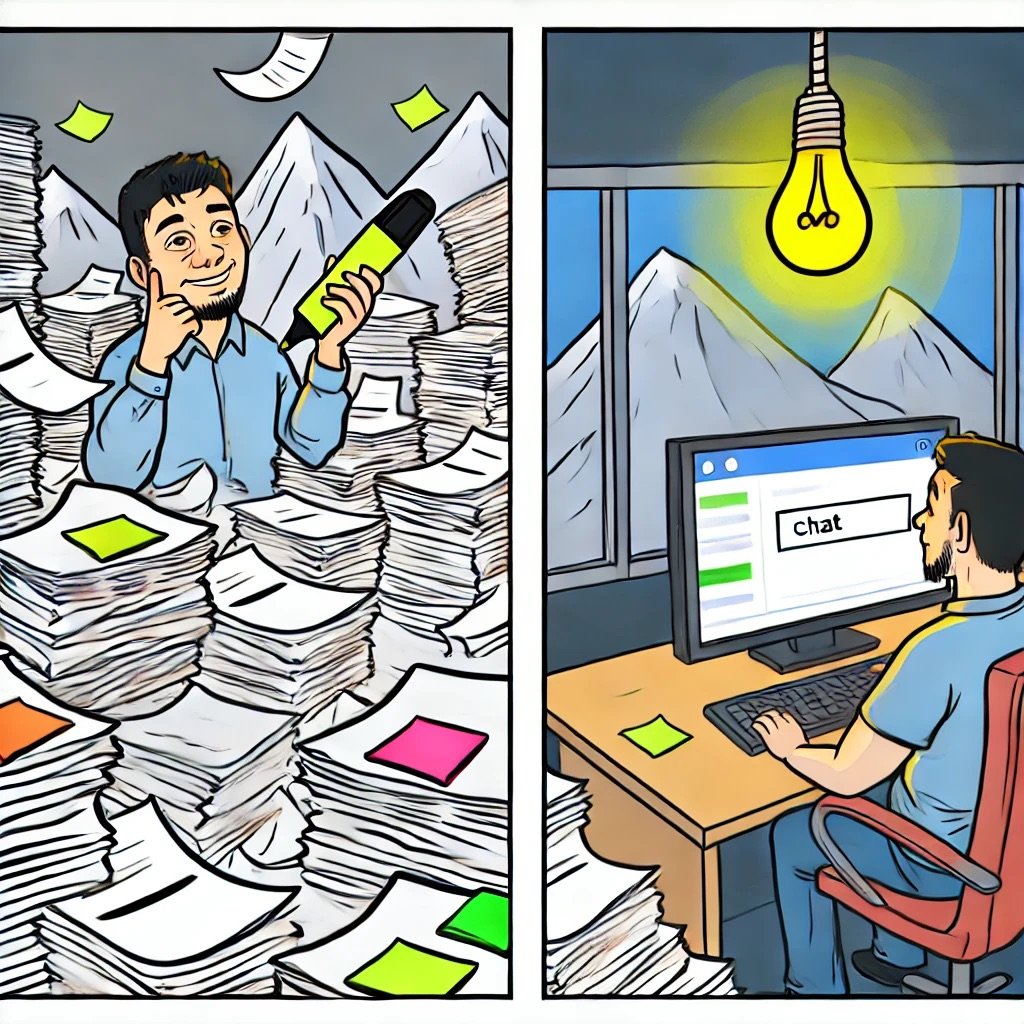

Generating Text

This is one of the trickiest ways to use an LLM, but perhaps the most common. When you hear about LLMs making stuff up (aka hallucinating), it’s generally in this kind of use. My general recommendation: if you are going to use an LLM to generate text from a prompt (say, an email you are struggling to write), don’t copy and paste the generated text. Use it as a template instead. Better yet, the best way to use an LLM to generate text is often not to generate text at all, but to ask for an outline, or the key things you could or should include.

That said, there are times when letting the LLM do the work will make the most sense. For those times there are two ways to get the best possible outcome:

- Give the LLM a lot of context and instruction.

- Anchor the LLM to source material.

I’ll break down each technique. It goes without saying, but the best quality comes from combining both techniques at once.

OK, first an example. Let’s have an LLM write an email to a friend we haven’t seen in a while:

That’s a pretty shit email, right? Imagine getting that from a long lost friend. But we should expect it to be bad because we gave the LLM only a single data point to work off of: “old friend”. Let’s add far more context and instruction and look at how things change:

The wild thing here is that this text mostly seems human in nature (which is where LLMs excel) but might not actually sound like you.

That kind of text generation is really common, and the longer and more detailed your prompt, the higher quality the output will be (that was from Claude 3.5 Sonnet). But you start to run into diminishing returns: if you are spending so much time writing a detailed prompt, couldn’t you just write the email yourself? I think so.

Which is why it’s more interesting to anchor generated text with source material. A common practice right now is to have an LLM write a cover letter for you. You could have it spit out something generic like we prompted above, or you can do what I call anchoring the prompt with source facts. To do that for something like a cover letter, you need two things: a job description and a resume. For the sake of example, I’ve PDF’d an article view of Bill Gates’ Wikipedia page, and am using a job description from the Gates Foundation for a ‘Deputy Director, Foundation Strategy Office’ role. Here’s how that goes:

That’s pretty solid. What is happening here is that the LLM is effectively matching who you are with what the job is, and then drawing that connection in the letter. This type of use is powerful because, while the letter is LLM-generated, it’s highly specific to the role. Can people sniff this out? Probably? But the point I want to make isn’t about cover letters and whether this is a good or bad idea. It’s that if you give an LLM two data sources and have it generate text based on both of them, the result is pretty damned good, and exceedingly fast.

(Generative AI detection is not cut and dried. There are a lot of articles out there claiming people can detect it using XYZ things, but at the end of the day, any company filtering automatically on this is just as likely to flag a well-written, human-authored cover letter as a truly AI-generated one.)

So while having it write the email to the long-lost friend doesn’t save you much time, writing a cover letter with that type of matching does save loads of time, since it would be tedious to do manually, and you might miss connections as boredom sets in (hiring is broken).
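If you find yourself doing this kind of anchored generation often, it can help to template the prompt so the instructions and the source material always travel together. Here’s a minimal sketch in Python, assuming you paste the job description and resume in as plain text. The function name `build_anchored_prompt`, the XML-style tags, and the instruction wording are my own illustrative choices, not a feature of Claude or any other tool; the output is just a string you would paste into whichever LLM you use.

```python
def build_anchored_prompt(task: str, instructions: list[str], sources: dict[str, str]) -> str:
    """Assemble a prompt that combines explicit instructions (technique 1)
    with labeled source documents the model is anchored to (technique 2)."""
    # Wrap each source in a labeled tag so the model can tell them apart
    source_blocks = "\n\n".join(
        f"<{label}>\n{text.strip()}\n</{label}>" for label, text in sources.items()
    )
    # Turn the instruction list into a bulleted block
    rules = "\n".join(f"- {rule}" for rule in instructions)
    return (
        f"{task}\n\n"
        f"Follow these instructions:\n{rules}\n\n"
        f"Use only the facts in the source documents below.\n\n"
        f"{source_blocks}"
    )


prompt = build_anchored_prompt(
    task="Write a cover letter for the job below, based on the resume below.",
    instructions=[
        "Connect specific experience in the resume to specific requirements in the job description.",
        "Do not invent accomplishments that are not in the resume.",
        "Keep it under 400 words.",
    ],
    # Placeholders: paste the real text of each document here
    sources={
        "job_description": "PASTE JOB DESCRIPTION TEXT HERE",
        "resume": "PASTE RESUME TEXT HERE",
    },
)
print(prompt)
```

The tags aren’t magic; they just make it obvious to the model where one source ends and the other begins, which matters when you are asking it to draw connections between the two.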

Revising Text

OK, next is revising text. There’s a lot of tooling around this: grammar checkers, style checkers, and tone checkers. But all of them are more or less built on the same underlying foundation models, so using one of the major LLMs directly is going to yield as good or better results. There are three areas where LLMs really help with text revision: Tone Adjustment, Weak Arguments, and Length.

Tone Adjustment: simply give the LLM your text, ask it to make it more polite, professional, casual, etc., and it will go to work for you. Let’s pick on Apple for this. Here’s the first paragraph from one of its news releases:

Starting with iOS 18.1, developers will be able to offer NFC contactless transactions using the Secure Element from within their own apps on iPhone, separate from Apple Pay and Apple Wallet. Using the new NFC and SE (Secure Element) APIs, developers will be able to offer in-app contactless transactions for in-store payments, car keys, closed-loop transit, corporate badges, student IDs, home keys, hotel keys, merchant loyalty and rewards cards, and event tickets, with government IDs to be supported in the future.

Let’s make that sound more casual and friendly:

Prompt: I am writing a press release for a massive company,

I would like to make it sound more casual and friendly,

here’s my opening paragraph, please rewrite it:

Hey there! We’ve got some exciting news for iPhone users and app developers. With iOS 18.1, we’re opening up a whole new world of possibilities. Imagine using your iPhone for all sorts of contactless transactions, right from your favorite apps – and we’re not just talking about Apple Pay or Apple Wallet.

Want to pay for your coffee, unlock your car, hop on the subway, or flash your work ID? There’s going to be an app for that! Our new NFC and Secure Element tools will let developers create safe, easy-to-use contactless features for all kinds of things – from loyalty cards to concert tickets. And stay tuned, because we’re working on adding government IDs to the mix too!

Or make it into a poem:

Prompt: Actually, scratch that, please make it into a poem

In the palm of your hand, a world unfolds,

iOS 18.1, new stories to be told.

No longer bound by Wallet or Pay,

A tap of your iPhone opens the way.

NFC and Secure Element, hand in hand,

Developers' dreams across the land.

Coffee shops and subway rides,

Car keys and office IDs - no more divides.

Loyalty cards and concert nights,

Hotel access and home delights.

A future where your ID's digital too,

All from your iPhone, safe and true.

So here's to progress, here's to ease,

To contactless wonders, if you please.

iOS 18.1, a new frontier,

The future of transactions is finally here!

So yes, this is one of the highest-value uses. In my experience, the best way to go about it is to ask the LLM what the tone currently sounds like, tell it what tone you want, and then ask for suggestions to bridge that gap. That would be something like: “Here is some text. I’d like it to sound professional but casual. Can you analyze the current tone, tell me what it currently sounds like, and make suggestions for how I might make it sound more like what I want?” That keeps it from rewriting the text, helps you learn, and lets you retain your voice.

Weak Arguments: Another interesting text revision is to ask an LLM to critique the quality of your argument. I’ll pick on myself here with a recent LinkedIn post of mine and ask the LLM to critique the arguments:

Again, the key here is that rather than having the LLM rewrite the post (at which point it’s not really my thinking), I have an on-demand reader who will instantly tell me what I, as the writer, am not seeing or thinking of. But keep in mind: if you ask an LLM to find something, it’ll often find something. And here the choice of LLM matters, as each is going to find different things. This is not a game where you can keep going until the LLM says the post is perfect, so you have to pick your battles and decide what matters.

Length: for this I’ll take some sample text from Wikipedia’s entry on Quantum Thermodynamics so we are all as utterly lost as each other, and I am going to ask the LLM to shorten it, making it more concise without losing the context.

This is really where a poor use case shows itself: I couldn’t tell whether this was condensed well, because I don’t know what the fuck this paragraph is talking about. But let’s keep pushing the LLM on it:

I don’t know the source material well enough to know if this is good or not, which is the point of picking this topic. If you don’t know the content of what you are asking the LLM to revise, you need to be very careful, because it might end up omitting key things. So when I ask the LLM to shorten something, I use the result as a guide, an example of what seems extraneous and of wording choices, not as a copy-and-paste product. There’s no reason to ask it to lengthen things; no one wants that. The best use here is to get ideas about how you might condense things, but generally this is a rough use case.

Summarizing Text

A lot of people are investing heavily in having LLMs summarize things for them. Apple is putting this at the forefront of iOS, but since that’s not out yet, we will have to stick with current LLMs to look at summarizing long text.

But it’s almost not worth it, because LLMs are not very good right now at high-quality summarization. They can miss important details, the things a human summarizing the same text would not miss. At the same time, research has shown that LLMs focus heavily on the start and end of a text and tend to neglect the middle. Now, this is yet another area where a good prompt will go a long way toward a better summary. I am going to ask the LLM to summarize my Best List from 2022, which has a little over 30 products I picked as the best and is extremely long. (To do this, I converted it to a PDF by ‘printing’ the webpage.)

Summary:

That gets you the gist of what is going on, but I don’t think that this is a good summary. It’s not really how a human would summarize this page. Let’s take another stab, and since we know this involves products, let’s not ask for a generic summary, but a key item list:

The LLM kept going and returned every item. That’s nice, but it’s just a list, not really summarizing shit. Let’s try a different tactic. Here you can see how I told the LLM what is going on and gave it a clear structure for what I want:

Now, it clearly missed some stuff, since there should be 30+ items, but that’s the core issue with LLM summarization: it misses stuff. There’s no way around it. Just keep that in mind, and you can still make plenty of use of these tools for summarization. CliffsNotes, they are not.
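When you already know some of what should be in a summary (here, a list of 30+ products), one cheap sanity check is to compare the summary against that list instead of eyeballing it. A minimal sketch in Python, assuming you have the expected item names as plain strings; `missing_items` and the sample product names are hypothetical, not the actual 2022 list. It uses a plain case-insensitive substring match, so an item the LLM paraphrased will show up as "missing" and still needs a human look.

```python
def missing_items(expected: list[str], summary: str) -> list[str]:
    """Return the expected items whose names never appear in the summary.
    Case-insensitive substring match only: paraphrased names count as missing."""
    lowered = summary.lower()
    return [item for item in expected if item.lower() not in lowered]


# Hypothetical items and summary, for illustration only
expected = ["Espresso Grinder", "Standing Desk", "Noise-Cancelling Headphones"]
summary = "Highlights include a standing desk and noise-cancelling headphones."

gaps = missing_items(expected, summary)
print(f"{len(expected) - len(gaps)}/{len(expected)} items covered; missing: {gaps}")
# prints: 2/3 items covered; missing: ['Espresso Grinder']
```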

Retrieving Information from Text

One of the use cases where LLMs can help a lot is as a form of advanced search over text. This is effectively what Perplexity is doing with the web at large: it finds the pages on the web that match your query, aggregates them, then summarizes and displays what sounds like the answer (which is also why it can be wrong at times). But you can do this yourself easily: upload a large body of text, or a PDF or other file, and start asking the LLM to find things within it.

This is fantastic for legal documents, or really lengthy files where the answers might not be on a single page and instead need to be tied together. But it’s imperfect. To look at this, let’s upload the Supreme Court ruling in Harrington v. Purdue Pharma and ask questions about it:

Using a tool one file at a time like this can be a touch annoying, which is why you are seeing more and more commercial offerings that let you query all your files this way. They work much like Perplexity: they first find the relevant documents, then the relevant parts of those documents, and then they formulate the answer. That is where the real risk is, because LLMs can miss things, but you can also be practical and get value.
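To make that "find the documents, then the parts, then answer" flow concrete, here’s a toy sketch in Python. It’s an assumption-heavy stand-in, not how any particular product works: it splits documents into fixed-size word chunks and ranks them by simple keyword overlap, whereas commercial tools more commonly use embedding-based search. The function names and the sample lease text are hypothetical. The final string is what you would hand to the LLM, and you can see the weak spot: if the right chunk doesn’t make the cut, the LLM never sees it.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; punctuation is dropped
    return re.findall(r"[a-z0-9']+", text.lower())


def chunk(text: str, size: int = 50) -> list[str]:
    # Split a document into passages of roughly `size` words
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def score(query: str, passage: str) -> int:
    # Bag-of-words overlap: how many query-word occurrences the passage shares
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)


def retrieve(query: str, documents: dict[str, str], top_k: int = 3) -> list[tuple[str, str]]:
    # Score every chunk of every document at once (document and passage
    # selection collapsed into one step), then keep the best non-zero matches
    scored = [
        (score(query, passage), name, passage)
        for name, text in documents.items()
        for passage in chunk(text)
    ]
    scored.sort(key=lambda t: t[0], reverse=True)
    return [(name, passage) for s, name, passage in scored[:top_k] if s > 0]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    # Final step: hand only the retrieved excerpts to the LLM
    context = "\n\n".join(f"[{name}] {passage}" for name, passage in passages)
    return (
        "Answer using only the excerpts below. If they don't contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


docs = {
    "lease.txt": "The tenant shall pay rent on the first day of each month. "
                 "A late fee applies after five days.",
    "pets.txt": "No pets are allowed without written consent of the landlord.",
}
question = "When is rent due each month?"
hits = retrieve(question, docs, top_k=1)
print(build_prompt(question, hits))
```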

If it’s a document you don’t understand well, and it’s so long that you are unlikely to ever read it, then you are probably better off using the LLM to query it, while keeping in the back of your mind that you might be getting slightly wrong information.

Legal contracts are an area where an LLM can sift through and find you a lot of interesting information. Let’s take a blank California lease template:

For a lot of people, reading these types of documents is awful, so they don’t read them. Instead, as you can see here, the LLM can answer questions even before you have filled in the fields. So one good way to use an LLM is to feed it a document and ask the questions you have before signing. It won’t replace a lawyer in any way, but I also don’t think most people have these documents reviewed by a lawyer. User beware, I guess.

If you take away one thing from this, it’s that asking the LLM things, rather than using it to generate copy and paste text, is almost always a better use case.

That’s as quick as I can make the overview; hope it helps.

There are other uses that are fun, so maybe next time.